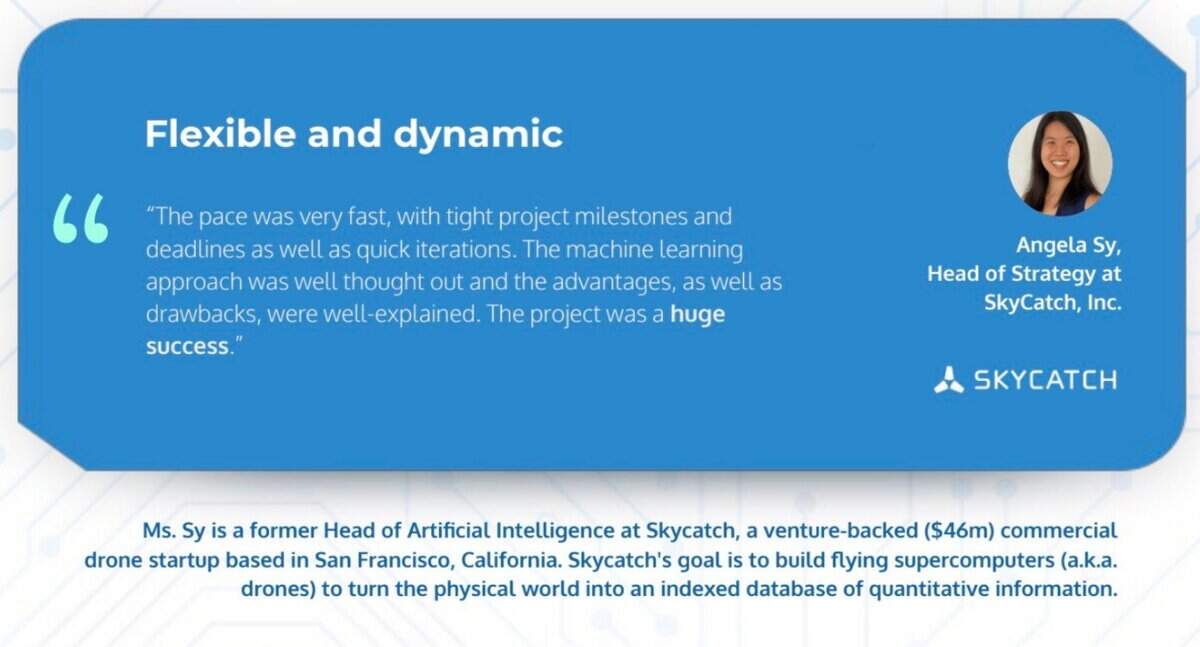

Drones are revolutionizing the way construction sites are monitored, managed, and optimized. In collaboration with SkyCatch, a San Francisco-based drone technology company, DevsData LLC developed AI-powered computer vision software to bring real-time activity detection to complex industrial environments. The project pushed the boundaries of deep learning, object tracking, and high-speed data processing in real-world conditions.

SkyCatch, Inc. is a drone technology company, headquartered in San Francisco, that builds AI-powered data solutions for the construction, mining, and energy industries. Its platform combines drone imagery, 3D modeling, and computer vision to support real-time site monitoring and informed decision-making in complex industrial settings. With a team of 42 employees and annual revenue of $6.3 million, the company operates a lean organization while providing advanced technology infrastructure to clients working in demanding environments.

SkyCatch solutions are used to track construction progress, detect on-site activity, and automate reporting processes. The company maintains a strong focus on AI and automation, continuously evolving its product capabilities to meet the growing demand for efficiency and real-time data integration in industrial operations.

SkyCatch operates in sectors where precision, speed, and reliability are critical. Its clients, primarily in construction and mining, depend on accurate, real-time data to monitor active sites, assess safety risks, and make time-sensitive operational decisions. Traditional manual inspection methods are too slow and error-prone for such environments, creating an urgent need for AI-powered automation. Delays or inaccuracies in data capture can directly affect project timelines, compliance, and operational costs, making real-time intelligence not just a technical goal but a business imperative.

The core challenge was to develop a computer vision system capable of detecting specific equipment and activities from drone video streams under varying lighting, movement, and site conditions.

The solution had to go beyond simple object detection. SkyCatch required a model that could interpret scenes, track movement across frames, and recognize complex activities such as equipment operation and interaction patterns on active sites. The model also had to work reliably across varied terrains and integrate seamlessly with existing drone hardware and data workflows. Because these environments are high-risk and capital-intensive, any AI solution had to demonstrate not only technical accuracy but also operational reliability under field conditions.

Deployment was another key concern. The system had to be lightweight, scalable, and cloud-optimized, with minimal latency. This was essential to ensure rapid insights for decision-makers, enabling timely interventions that could reduce safety incidents or avoid costly downtime. SkyCatch also required flexibility through containerized deployment and cloud-based training, supporting scalability across global client operations. In short, the technical challenge was tightly linked to business outcomes. Speed, reliability, and scalability were all crucial for maintaining client trust and staying competitive in a fast-moving industry.

SkyCatch approached the project with clearly defined technical and operational goals. The primary objective was to build a robust AI-based system capable of detecting and interpreting construction site activities from drone video footage in real time. Accuracy and speed were equally important; false positives or delays in reporting could undermine the system’s usefulness in live industrial environments.

Beyond activity detection, SkyCatch expected the solution to integrate seamlessly into its existing drone data pipeline, enabling automatic analysis without requiring manual review. The system needed to be adaptable to varying terrains, camera angles, and environmental conditions, and perform consistently across different sites. Finally, scalability was a key requirement: the models had to be optimized for deployment across cloud-based infrastructure, allowing efficient rollout across multiple client operations.

To address these requirements, we began by designing a multi-stage computer vision pipeline tailored specifically to real-world drone footage. Our initial experimentation focused on object detection using two deep learning models: RCNN (Region-based Convolutional Neural Network) and Faster RCNN, a later and considerably faster member of the same family that replaces RCNN's external region-proposal step with a learned Region Proposal Network. Both models locate and classify objects within images, and comparing them allowed us to evaluate precision and performance across varied datasets and visual conditions.

Building on insights from a previous project in which we built human detection software for video streams for a defense firm, we ultimately adopted a YOLO-based architecture ("You Only Look Once"). Unlike two-stage detectors such as RCNN and Faster RCNN, which first propose candidate regions and then classify each one, YOLO processes an entire frame in a single pass, enabling real-time detection of multiple objects simultaneously. This made it well suited to the time-sensitive requirements of construction site monitoring, where speed is as critical as accuracy.
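To make the "single pass" idea concrete, here is a minimal sketch of how one YOLO forward pass can be turned into bounding boxes. It assumes a YOLOv1-style output in which the frame is divided into an S×S grid and each cell predicts one box as `(x, y, w, h, objectness, class_probs)`: `x`, `y` are the box centre's offset inside the cell, and `w`, `h` are relative to the whole image. The function name, the threshold, and the one-box-per-cell layout are illustrative assumptions, not the exact model delivered to SkyCatch; later YOLO versions use anchor boxes and different parameterizations.

```python
from typing import List, Sequence, Tuple

# (x1, y1, x2, y2, score, class_id) in pixel coordinates
Detection = Tuple[float, float, float, float, float, int]


def decode_yolo_grid(grid: Sequence[Sequence[tuple]], img_w: float, img_h: float,
                     score_threshold: float = 0.5) -> List[Detection]:
    """Hypothetical decoder for a YOLOv1-style S x S prediction grid.

    grid[row][col] = (x, y, w, h, objectness, class_probs), where x and y are
    offsets within the cell (0..1) and w and h are fractions of the image size.
    """
    s = len(grid)
    detections: List[Detection] = []
    for row in range(s):
        for col in range(s):
            x, y, w, h, objectness, class_probs = grid[row][col]
            # Pick the most likely class for this cell's box.
            class_id = max(range(len(class_probs)), key=class_probs.__getitem__)
            score = objectness * class_probs[class_id]
            if score < score_threshold:
                continue  # drop low-confidence boxes
            # Convert the cell-relative centre to absolute pixel coordinates.
            cx = (col + x) / s * img_w
            cy = (row + y) / s * img_h
            bw, bh = w * img_w, h * img_h
            detections.append((cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2,
                               score, class_id))
    return detections
```

Every cell is evaluated from the same network output, which is why all objects in the frame come out of one pass. In a full detector, overlapping boxes would typically be merged afterwards with non-maximum suppression.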

To enable the system to recognize not just objects but also actions and sequences, such as machinery movement or equipment interaction, we incorporated LSTM (Long Short-Term Memory) layers. These specialized components of neural networks are designed to analyze patterns over time, making it possible to understand behavior across multiple frames of video rather than treating each frame in isolation.
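The sketch below shows the gate mechanics that let an LSTM carry information from one frame to the next. In the project the temporal layers were built with Keras; here we use plain NumPy so the update equations are visible. It assumes each frame has already been reduced to a fixed-length feature vector, for example by the detector. The class name, feature size, hidden size, number of activity classes, and the i/f/o/g gate ordering are all hypothetical, and the weights are random and untrained.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


class TinyLSTMClassifier:
    """Hypothetical LSTM over per-frame feature vectors -> activity class probabilities."""

    def __init__(self, feature_dim: int, hidden_dim: int, num_classes: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.hidden_dim = hidden_dim
        # Stacked weights for input (i), forget (f), output (o) gates and candidate (g).
        self.W = rng.normal(0.0, 0.1, (4 * hidden_dim, feature_dim))
        self.U = rng.normal(0.0, 0.1, (4 * hidden_dim, hidden_dim))
        self.b = np.zeros(4 * hidden_dim)
        # Dense output layer mapping the final hidden state to class scores.
        self.W_out = rng.normal(0.0, 0.1, (num_classes, hidden_dim))
        self.b_out = np.zeros(num_classes)

    def encode(self, frames: np.ndarray) -> np.ndarray:
        """Run the LSTM over frames (shape: time x feature_dim); return the last hidden state."""
        H = self.hidden_dim
        h = np.zeros(H)  # hidden state: what the model "outputs" at each step
        c = np.zeros(H)  # cell state: long-term memory carried across frames
        for x in frames:
            z = self.W @ x + self.U @ h + self.b
            i = sigmoid(z[0:H])          # how much new information to write
            f = sigmoid(z[H:2 * H])      # how much old memory to keep
            o = sigmoid(z[2 * H:3 * H])  # how much memory to expose
            g = np.tanh(z[3 * H:])       # candidate memory content
            c = f * c + i * g
            h = o * np.tanh(c)
        return h

    def predict_proba(self, frames: np.ndarray) -> np.ndarray:
        logits = self.W_out @ self.encode(frames) + self.b_out
        exp = np.exp(logits - logits.max())  # numerically stable softmax
        return exp / exp.sum()
```

Because the hidden and cell states are threaded through the loop, the prediction depends on the order of the frames and not only on their content. That order dependence is what lets the model distinguish a sequence such as an excavator loading and then moving from the same frames shown in reverse.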

Our development process began with data collection and preparation. We worked with both client-provided drone footage and supplemental video data sourced from public platforms to ensure a diverse and representative training set. Frames were sampled at 1 Hz (one frame per second) to create datasets suitable for both object detection and temporal modeling tasks.
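Sampling at 1 Hz means keeping roughly one frame out of every `fps` frames of the source video, and source frame rates are not always whole numbers (29.97 fps is common). The helper below is a minimal sketch, assuming the frame count and frame rate are known from the video's metadata. It computes which frame indices to keep, and the function name and parameters are illustrative.

```python
from typing import List


def sample_frame_indices(num_frames: int, source_fps: float,
                         target_hz: float = 1.0) -> List[int]:
    """Hypothetical helper: indices of frames to keep when downsampling to target_hz."""
    if source_fps <= 0 or target_hz <= 0:
        raise ValueError("source_fps and target_hz must be positive")
    step = source_fps / target_hz  # source frames between consecutive samples
    indices: List[int] = []
    k = 0
    while True:
        # Round each sample time to the nearest source frame, so fractional
        # frame rates do not accumulate drift over long videos.
        idx = round(k * step)
        if idx >= num_frames:
            break
        indices.append(idx)
        k += 1
    return indices
```

For example, `sample_frame_indices(100, 29.97)` keeps frames 0, 30, 60, and 90. The resulting indices could then be read with any video decoder, such as OpenCV's `VideoCapture`.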

The deep learning models were developed using Keras with TensorFlow as the backend and trained on AWS p2.xlarge GPU instances, so that training capacity could scale with demand. The RCNN and Faster RCNN models were trained to establish baselines, while the YOLO-LSTM model was fine-tuned for real-time deployment. All models were trained on the training data and evaluated on hold-out sets, with mean Intersection over Union (IoU) as the primary accuracy metric.
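IoU measures how well a predicted box overlaps a ground-truth box. It is the area of their intersection divided by the area of their union, so 1.0 means a perfect match and 0.0 means no overlap. The exact matching protocol used to compute mean IoU in this project is not described here. The sketch below assumes one common convention: each ground-truth box is matched to the prediction that overlaps it best, and a missed object scores 0. Boxes are `(x1, y1, x2, y2)` and the function names are illustrative.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # 0 if the boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def mean_iou(ground_truth: Sequence[Box], predictions: Sequence[Box]) -> float:
    """Assumed convention: average, over ground-truth boxes, of the best-matching IoU."""
    if not ground_truth:
        raise ValueError("mean IoU is undefined without ground-truth boxes")
    best = [max((iou(gt, p) for p in predictions), default=0.0) for gt in ground_truth]
    return sum(best) / len(best)
```

Under this convention, two 2×2 boxes offset by one unit in each direction share an area of 1 out of a union of 7, giving an IoU of about 0.14. A ground-truth object with no prediction at all pulls the mean down, which penalizes missed detections as well as poorly placed ones.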

Deployment was handled via Docker containers, enabling consistent environments across development, testing, and production. This containerized approach simplified integration with SkyCatch’s existing infrastructure and made it possible to replicate the solution across client-specific cloud setups.

The final system supported real-time object and activity detection, met performance benchmarks, and was delivered with documentation and support for further in-house scaling.

The final solution enabled SkyCatch to perform real-time detection and classification of construction site activities directly from drone video feeds. The YOLO-LSTM model delivered both speed and accuracy, meeting the technical requirements for live deployment in operational environments. The system successfully identified key site activities, such as equipment movement and task execution, with a high degree of precision across varied terrain and conditions.

The model achieved a mean IoU of 87.5%, with inference times under 250 ms per frame, enabling near-real-time analysis. This latency is in line with spatiotemporal models used for activity recognition, which often operate in the 200–300 ms range because of the added cost of analyzing sequences of frames. Integration with SkyCatch's existing infrastructure was completed without disruption, aided by containerized deployment and cloud-based scalability.
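A per-frame latency figure translates directly into throughput, and a back-of-the-envelope calculation shows why 250 ms is comfortable at the sampling rate used for the datasets. The sketch assumes that inference also runs on frames sampled at 1 Hz and that one worker processes frames strictly one at a time with no batching. Both are simplifying assumptions, and the function names are illustrative.

```python
import math


def max_fps(latency_ms: float) -> float:
    """Frames per second a single sequential worker can process."""
    return 1000.0 / latency_ms


def utilization(latency_ms: float, sample_hz: float) -> float:
    """Fraction of each second a worker spends busy on one stream."""
    return latency_ms * sample_hz / 1000.0


def streams_per_worker(latency_ms: float, sample_hz: float) -> int:
    """How many streams sampled at sample_hz one worker can keep up with."""
    return math.floor(max_fps(latency_ms) / sample_hz)
```

At 250 ms per frame, one worker can handle 4 frames per second. A single 1 Hz stream would keep it busy only 25% of the time, leaving room for up to 4 such streams per worker, or 2 if the sampling rate were doubled.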

Manual review time for video footage was reduced by over 70%, significantly accelerating reporting cycles and improving on-site decision-making. The architecture was designed to support scalable rollout across multiple environments, allowing for efficient extension as needed.

Beyond these measurable gains, the delivered solution improved the quality of insights available to on-site teams. Following deployment, internal teams reported better situational awareness, faster anomaly detection, and smoother coordination across project phases.

| Category | Detail |
|---|---|
| Client | SkyCatch, Inc., a drone tech company based in San Francisco with a focus on the construction, mining, and energy sectors |
| Challenge | Needed real-time activity detection from drone video in diverse environments, with minimal latency and high model accuracy |
| Goals | Build a scalable, cloud-ready AI system for scene interpretation and equipment activity recognition in real time |
| Tech Stack | Keras, TensorFlow, AWS p2.xlarge, Docker, RCNN, Faster RCNN, YOLO with LSTM extensions |
| Key Features | Object tracking, temporal activity detection, containerized deployment, seamless integration with drone data pipeline |
| Deployment | Docker-based solution optimized for cloud infrastructure, enabling smooth rollout across client environments |
| Outcome | Real-time detection system successfully integrated, reduced manual review, improved reporting speed, and enhanced site visibility |
| Impact | Increased situational awareness, faster anomaly detection, and improved coordination across construction projects |

With a functional AI pipeline now embedded into their platform, SkyCatch is exploring new ways to extend activity recognition beyond construction into other industrial sectors such as mining and energy. Future development will focus on expanding the range of detectable activities, incorporating more advanced scene understanding, and refining the system’s performance in low-visibility conditions.

Collaboration is set to continue with an emphasis on improving model generalization and integrating additional data sources such as LiDAR and thermal imagery. There are also plans to enhance post-processing analytics, providing clients with not only raw detection data but also actionable recommendations. The partnership is evolving into an ongoing R&D effort focused on long-term innovation and product advancement.

Looking to integrate real-time video intelligence into your operations? Leverage our experience in developing high-performance computer vision systems. Get in touch to discuss how we can help you build tailored AI solutions that understand the world in motion. Contact DevsData LLC today at general@devsdata.com or head to www.devsdata.com.

